ComputerWorld has an article about Iran claiming to have two new supercomputers, fairly modest by Top500 standards, but laments the lack of details:

But Iran’s latest supercomputer announcement appears to have no details about the components used to build the systems. Iranian officials have not yet responded to request for details about them.

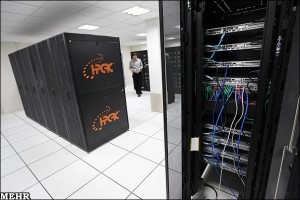

However, looking at the Iranian photo spread that they link to (which appears to be slashdot’ed now), the boxes in question are SuperMicro based systems (and so could be sourced from just about anywhere). There are some of their 2U storage based boxes with heaps of disk, along with both 2U and some 1U boxes which are presumably the compute nodes. The odd thing is that they’re spaced out quite a bit in the rack, and the 1U systems have two fans on the left hand side (which indicates something unusual about the layout of the box). Here’s an image from that Iranian news story:

The nice thing is that it’s pretty easy to find a slew of boxes on SuperMicro’s website that match the picture: it’s their 1U GPU node range, which are dual GPU beasts, for example:

The problem is that this range goes back a few years; it appears, for example, in an nVidia presentation on “the world’s fastest 1U server” from 2009. HPC-wire describe these original nodes as:

Inside the SS6016T-GF Supermicro box, the two M1060 GPUs modules are on opposite sides of the server chassis in a mirror image configuration, where one is facing up, the other facing down, allowing the heat to be distributed more evenly. The NVIDIA M1060 part uses a passive heat sink, and is cooled in conjunction with the rest of the server, which contains a total of eight counter-rotating fans. Supermicro also builds a variant of this model, in which it uses a Tesla C1060 card in place of the M1060. The C1060 has the same technical specs as the M1060, the principle difference being that the C1060 has an active fan heat sink of its own. In both instances though, the servers require plenty of juice. Supermicro uses a 1,400 watt power supply to drive these CPU-GPU hybrids.

According to the HPC-Wire article the whole system (2 x CPUs, 2 x GPUs) is rated at about 2TF for single-precision FP. nVidia rate the M1060 card at 933 GF (SP) and 78 GF (DP) so I’d reckon for DP FP you’re looking at maybe 180 GF per node. But now that range includes ones with the newer M2070 Fermi GPUs which can do 1030 GF (SP) and 515 GF (DP) and would get you up to just over 2TF SP and (more importantly for Top500 rankings) over 1TF DP per node.
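The per-node figures above are simple multiplication, and a minimal sketch makes that explicit. It counts only the two GPUs in each node, using the per-card peaks quoted above; the gap between the 156 GF it gives for the M1060 and my "maybe 180 GF" is a rough allowance for the two CPUs, which is not modelled here. The names `GPU_PEAK_GF` and `gpu_peak_per_node` are just illustrative.

```python
# Peak GFLOPS per GPU as quoted in the post: (single precision, double precision).
GPU_PEAK_GF = {
    "M1060": (933, 78),
    "M2070": (1030, 515),
}

GPUS_PER_NODE = 2  # the 1U boxes in question are dual-GPU


def gpu_peak_per_node(model):
    """Return (SP, DP) peak GFLOPS contributed by the GPUs in one node."""
    sp, dp = GPU_PEAK_GF[model]
    return GPUS_PER_NODE * sp, GPUS_PER_NODE * dp


for model in GPU_PEAK_GF:
    sp, dp = gpu_peak_per_node(model)
    print(f"{model}: {sp} GF SP, {dp} GF DP per node (GPUs only)")
```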

Now if we assume the claimed 89TF for the larger system is correct, that it is indeed double precision (to be valid for the Top500), that they measured it with HPL, and that the efficiency is about 0.5 (which seems about what a top ranked GPU cluster achieves with Infiniband), we can play some number games. Numbers below invalidated by Jeff Johnson’s observation, see the “updated” section for more!

If we assume these are older M1060 GPUs then you are looking at something in the order of 1000 compute nodes to be able to get that number as Rmax in Linpack – something of the order of 1MW. From the photos though I didn’t get the sense that it was that large; the way they spaced them out you’d need maybe 200 racks for the whole thing and that would have made an impressive photo (plus an awful lot of switches). Now if they’ve managed to get their hands on the newer M2070 based nodes then you could be looking at maybe 200 nodes, a more reasonable 280kW and maybe 40 racks. But I still didn’t get the sense that the datacentre was that crowded…
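For anyone wanting to redo this back-of-envelope estimate, here is a rough sketch of the arithmetic. The per-node DP figures (0.18TF and 1.03TF) and the 0.5 efficiency come from the text above; the assumption that every node draws its full 1,400 watt power supply rating is mine and is certainly an overestimate. The function name `nodes_needed` is illustrative only.

```python
import math

RMAX_TF = 89.0        # claimed figure for the larger system
HPL_EFFICIENCY = 0.5  # assumed Rmax / Rpeak ratio for a GPU cluster on Infiniband
NODE_POWER_KW = 1.4   # assumption: each node draws its full 1,400 W PSU rating


def nodes_needed(rmax_tf, node_dp_tf, efficiency=HPL_EFFICIENCY):
    """Nodes required so that Rpeak * efficiency reaches the target Rmax."""
    rpeak_tf = rmax_tf / efficiency
    return math.ceil(rpeak_tf / node_dp_tf)


for label, node_dp_tf in [("M1060", 0.18), ("M2070", 1.03)]:
    n = nodes_needed(RMAX_TF, node_dp_tf)
    print(f"{label}: {n} nodes, roughly {n * NODE_POWER_KW:.0f} kW")
```

This lands on just under 1000 nodes for the M1060 case and about 170 for the M2070 case, which I rounded up generously to "maybe 200" above.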

So I’d guess that instead of actually running Linpack on the system they’ve just totted up the Rpeaks, in which case you would get away with about 90 nodes, so maybe 15 or 20 racks, which feels to me more like the scale depicted in the images. That would still give them a system that would hit about 40TF and give it a respectable ranking around 250 on the Top500 IF they used Infiniband to connect it up. If it was gigabit ethernet then you’d be looking at maybe another 50% hit to its Rmax, and that would drop it out of the Top500 altogether as you’d need at least 31TF to qualify in last November’s list.
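The same guesswork as a small sketch, treating the claimed 89TF as a summed Rpeak. The 0.5 Infiniband efficiency, the further 50% gigabit ethernet penalty and the 31TF entry level are the rough figures used above, not measurements; `estimated_rmax` is an illustrative helper.

```python
CLAIMED_RPEAK_TF = 89.0   # the claimed figure, read here as a summed Rpeak
TOP500_CUTOFF_TF = 31.0   # entry level for the November list, as quoted above
IB_EFFICIENCY = 0.5       # assumed HPL efficiency with Infiniband
GIGE_PENALTY = 0.5        # assumed "another 50% hit" with gigabit ethernet


def estimated_rmax(rpeak_tf, efficiency):
    """Scale a theoretical peak by an assumed HPL efficiency."""
    return rpeak_tf * efficiency


rmax_ib = estimated_rmax(CLAIMED_RPEAK_TF, IB_EFFICIENCY)
rmax_gige = estimated_rmax(rmax_ib, GIGE_PENALTY)

for label, rmax in [("Infiniband", rmax_ib), ("gigabit ethernet", rmax_gige)]:
    verdict = "on" if rmax >= TOP500_CUTOFF_TF else "off"
    print(f"{label}: about {rmax:.1f} TF, {verdict} the list")
```

Strictly this gives nearer 45TF than 40TF for the Infiniband case, but the conclusion is the same either way: on the list with Infiniband, off it with gigabit ethernet.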

It’ll certainly be interesting to see what the system is if/when any more info emerges!

Update

In the comments Jeff Johnson has pointed out the 2U boxes are actually 4-in-2U dual socket nodes, i.e. each box will likely have either 32 or 48 cores depending on whether it contains quad core or six core chips. You can see that best from this rear shot of a rack:

There are mostly 8 of those units in a rack (though the rack at one end of the block has just 7, but there are 2 extra in a central rack below what may be an IB switch), so that’s 256 cores a rack if they’re quad core. There are 8 racks in a block and two blocks to a row so we’ve got 4,096 cores in that one row – or 6,144 if they’re 6 core chips!
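The core count is just a chain of multiplications, sketched below. It assumes every rack holds exactly 8 chassis, which glosses over the one rack with 7 and the rack with 2 extras mentioned above; the constant and function names are illustrative.

```python
NODES_PER_CHASSIS = 4   # 4-in-2U boxes
SOCKETS_PER_NODE = 2    # dual socket nodes
CHASSIS_PER_RACK = 8    # assumption: treat every rack as holding exactly 8
RACKS_PER_BLOCK = 8
BLOCKS_PER_ROW = 2


def cores_in_row(cores_per_socket):
    """Total cores in one row of 2U boxes for a given chip type."""
    per_chassis = NODES_PER_CHASSIS * SOCKETS_PER_NODE * cores_per_socket
    per_rack = per_chassis * CHASSIS_PER_RACK
    return per_rack * RACKS_PER_BLOCK * BLOCKS_PER_ROW


for cores_per_socket in (4, 6):
    print(f"{cores_per_socket} cores per socket: {cores_in_row(cores_per_socket)} cores")
```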

The row with the GPU nodes is harder to make out, as we cannot see the whole row from any combination of the photos, but in the front view we can see 2 populated racks of a block of 5, with 8 to a rack. The first rear view shows that the block next to it also has at least 2 racks populated with 8 GPU nodes. The second rear view is handy because whilst it shows the same racks as the first it demonstrates that these two blocks coincide with a single block of traditional nodes, raising the possibility of another pair of blocks to pair with the other half of the row of traditional nodes.

Assuming M2070 GPUs then you’re looking at 8TF a rack, or 32TF for the row (assuming no other racks populated outside of our view). If the visible nodes are duplicated on the other side then you’re looking at 64TF.

If we assume that the Intel nodes have quad core Nehalem-EP 2.93GHz chips then that would compare nicely to a Dell system at Saudi Aramco which is rated at an Rpeak of 48TF. Adding the 32TF for the visible GPU nodes gets us up to 80TF, which is close to their reported number, but still short (and still for Rpeak, not Rmax). So it’s likely that there are more GPU nodes, either in the 4 racks we cannot see into in the visible block of GPU racks or in another part of that row – or both! That would make a real life Top500 run of 89TF feasible, with Infiniband.
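The 48TF comparison can also be sanity checked from first principles. The sketch below assumes 4 double precision floating point operations per cycle per core for Nehalem-EP (that figure is my assumption, it isn't in the reports), and takes the 4,096 cores, 8TF per GPU rack and 4 visible GPU racks from the estimates above.

```python
CPU_CORES = 4096
CLOCK_GHZ = 2.93
DP_FLOPS_PER_CYCLE = 4   # assumption: per-core DP rate for Nehalem-EP (SSE)

GPU_TF_PER_RACK = 8.0    # 8 dual M2070 nodes at roughly 1 TF DP each
VISIBLE_GPU_RACKS = 4    # 2 populated racks seen in each of 2 blocks
CLAIMED_TF = 89.0

# cores * GHz * flops/cycle gives GFLOPS; divide by 1000 for TFLOPS.
cpu_rpeak_tf = CPU_CORES * CLOCK_GHZ * DP_FLOPS_PER_CYCLE / 1000
gpu_rpeak_tf = GPU_TF_PER_RACK * VISIBLE_GPU_RACKS
total_rpeak_tf = cpu_rpeak_tf + gpu_rpeak_tf

print(f"CPU Rpeak:   {cpu_rpeak_tf:.1f} TF")
print(f"GPU Rpeak:   {gpu_rpeak_tf:.1f} TF")
print(f"Total Rpeak: {total_rpeak_tf:.1f} TF")
print(f"Shortfall against the claim: {CLAIMED_TF - total_rpeak_tf:.1f} TF")
```

With these inputs the CPU side comes out at about 48TF, matching the Saudi Aramco comparison, and the total at about 80TF.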

Be great to have a floor plan and parts list! 🙂

Update 2

Going through the Iranian news reports they say that the two computers are located at the Amirkabir University of Technology (AUT) in Tehran, and Esfahan University of Technology (in Esfahan). The one in Tehran is the smaller of the two, but the figures they give appear, umm, unreliable. It seems like the usual journalists-not-understanding-tech issue compounded by translation issues – so for instance you get things like:

The supercomputer of Amirkabir University of Technology with graphic power of 22000 billion operations per second is able to process 34000 billion operations per second with the speed of 40GB. […] The supercomputer manufactured in Isfahan University of Technology has calculation ability of 34000 billion operations per second and its Graphics Processing Units (GPU) are able to do more than 32 billion operations per 100 seconds.

and..

Amirkabir University’s supercomputer has a power of 34,000 billion operations per second, and a speed of 40 gigahertz. […] The other supercomputer project carried out by Esfahan University of Technology is among the world’s top 500 supercomputers.

So is the AUT (Tehran) system 22TF or 34TF? Could it be Rpeak 34TF and Rmax 22TF? Is the Esfahan one 34TF (which would just creep onto the Top500) or higher?

Unfortunately it’s the Tehran system in the photos, not the Esfahan one (the giveaway is the HPCRC on the racks). So my estimate of 80TF Rpeak for it could well give a measured 32TF if it’s using ethernet as the interconnect (or if the CPUs are a slower clock in the 2U nodes). Or perhaps the GPU nodes are part of something else? That and slower clocked CPUs could bring the Rpeak down to 34TF.

Need more data!

These are simply Supermicro GPU 1U servers like http://www.abmx.com/1u-nvidia-tesla-servers. Technically they can get close to the Japanese effort: http://www.ornl.gov/info/ornlreview/v37_2_04/article02.shtml.

Just for reference: Cray XT3 with 7K cores does 40 TF.

@q12mohad Yup, that’s precisely what I was saying about them. 🙂

If you look at all of the photos you will see the 2U boxes aren’t storage. They are Supermicro 2U/Quad four-node dual socket systems. If all pictured were populated with two cpus (both Intel and AMD current generation being at least quad-core) and a reasonable core/RAM ratio of 1GB/core then the 2U systems alone represent >3072 cores and 3TB RAM. Strung with DDR IB as well. Not a small or weak system. Likely the largest known in the area between Saudi Arabia and India.

Thanks Jeff, I’ve now redone my analysis based on that (and a closer look at the photos, I was working from memory last night as the Iranian news source was still Slashdot’ed).

Anyone can buy Tesla 🙂

@Jeff well spotted, I’ve not played with those Supermicro boxes before (densest we’ve got are our SGI XE340 two-in-1U). I’d been looking at the photo of the back of the rack and trying to make sense of it and it matches what you’re suggesting.

@hideMe what’s possible and what’s meant to be allowed are not necessarily the same thing.. 😉

Isfahan university of technology have many Tesla m1060 but AmirKabir university have ATI Radeon 5900.

The system pictured is the Tehran super, the reports indicate that it’s the Esfahan system which could get into the Top500. The Iranian reports themselves don’t appear to mention 89TF anywhere, and the numbers they give do seem to have suffered from the usual journalistic incomprehension!

http://nhpcc.iut.ac.ir/fckeditor/userfiles/image/banafshe/400.jpg

That link doesn’t appear to work I’m afraid! Just times out trying to connect..

http://www.freeimagehosting.net/image.php?6f790e068f.jpg

This is image of Isfahan center, 4U & 1U systems…..

http://translate.google.com/translate?u=http%3A%2F%2Fwww.mehrnews.com%2Ffa%2Fnewsdetail.aspx%3FNewsID%3D1264517&sl=fa&tl=en&hl=&ie=UTF-8

http://img.irna.ir/1389/13891211/30274985/30274985-1040470.jpg

@hideMe, @hossein: Thanks so much for those links! The 4U boxes are unfamiliar to me..

4U boxes are TYAN FT48B8812. http://www.tyan.com/product_SKU_spec.aspx?ProductType=BB&pid=434&SKU=600000186

support up to 48 core and up to 512GB RAM and gpu …. 🙂